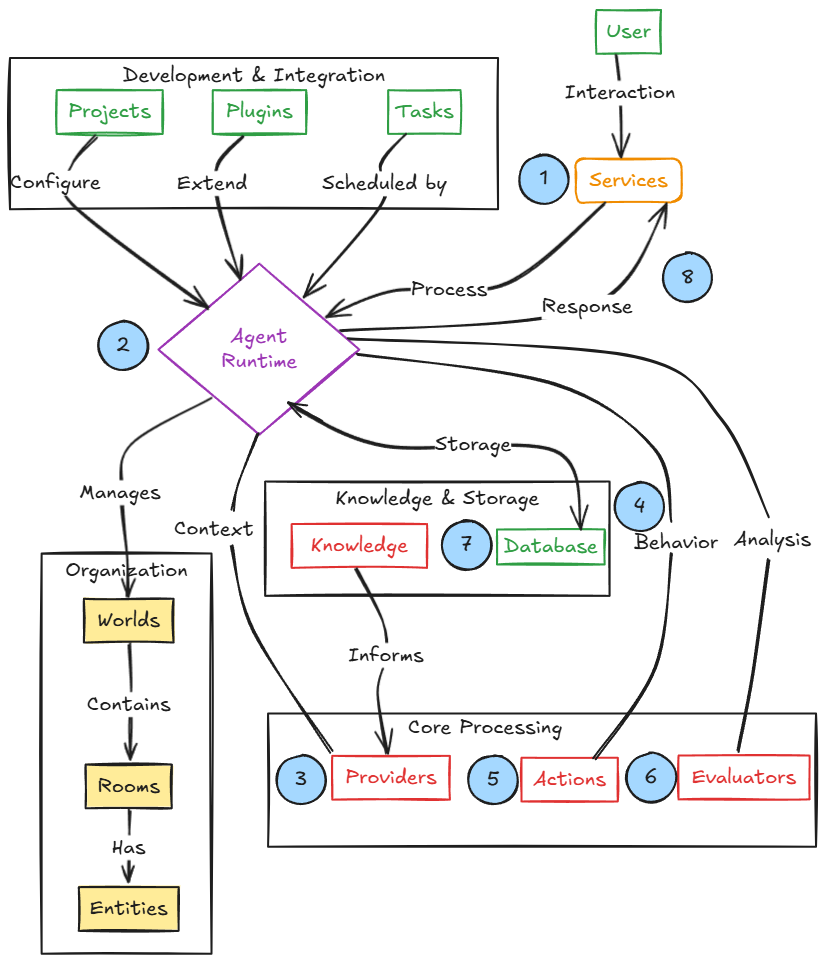

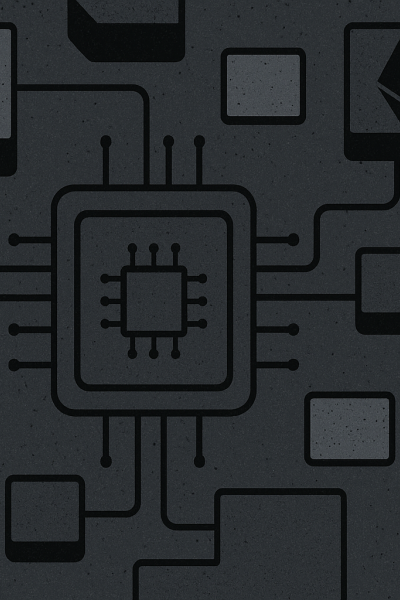

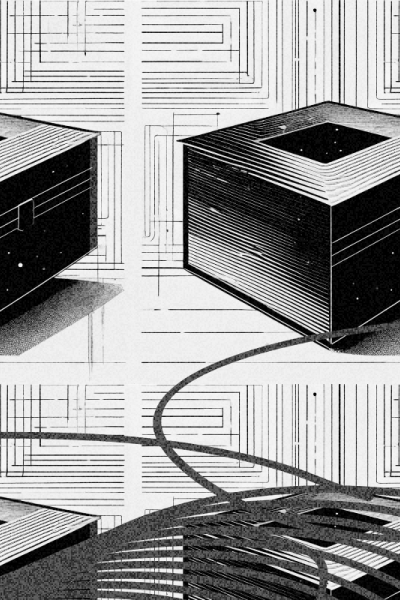

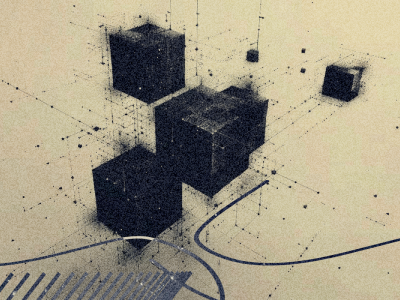

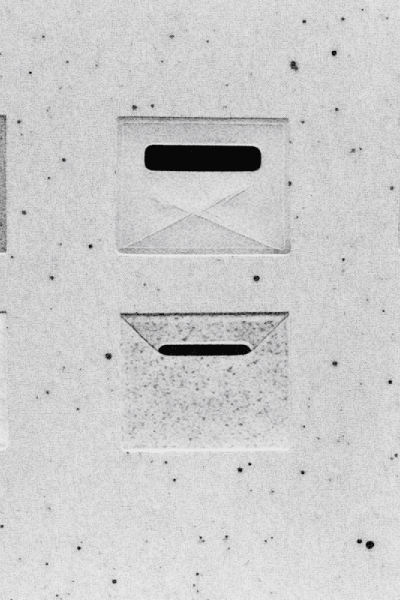

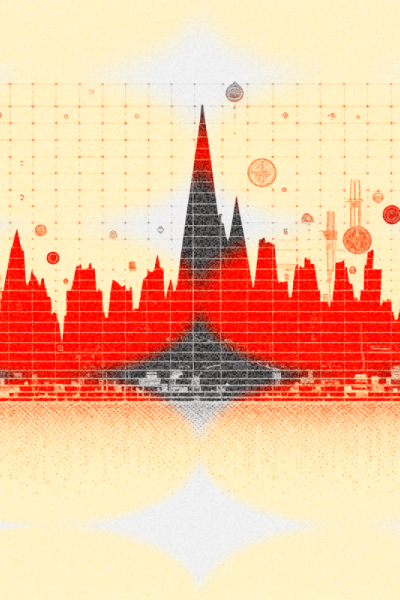

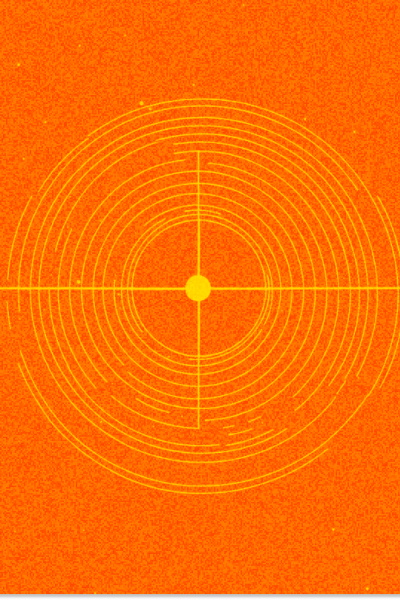

Based on the diagram, we can identify the following:

Core Components:

- Agent Runtime — The main component of the agent. It handles the database and coordinates other components.

- Services — This component connects agents to external services. Essentially, it’s the entry point for communication with agents. For example: Discord, X, Telegram.

- Database — The place where the agent stores everything it needs to remember. For example, who it talked to, what happened, what commands were executed, how different participants are connected, and its configuration settings.

Intelligence & Behavior:

- Actions — These are the things the agent can do: send transactions, answer questions, generate content, etc.

- Providers — Components that build the context for the agent’s LLM. They collect and structure relevant information (e.g., user data, previous messages, system state) so the agent can make informed decisions.

- Evaluators — Cognitive components that analyze the agent's dialogues after they end, extract important facts and patterns, and update the agent’s knowledge base. They help the agent learn and improve from past experiences.

- Knowledge — A structured system for storing information and the agent’s long-term memory. It preserves facts, conclusions, and personalized data so the agent can use them in future interactions.

Structure & Organization:

- Worlds — Like "separate worlds" or projects where the agent operates. Similar to servers in Discord or workspaces.

- Rooms — Channels within a world where specific discussions happen — like chats, channels, or individual tasks.

- Entities — All participants within the rooms: users, agents, bots, and any other entities that can be interacted with.
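
To make the World / Room / Entity hierarchy more concrete, here is a minimal TypeScript sketch. The type names (`WorldInfo`, `RoomInfo`, `EntityInfo`) and the helper functions are assumptions made up for illustration; they are not the actual ElizaOS schema or API.

```typescript
// Hypothetical types for illustration only; not the real ElizaOS data model.
interface EntityInfo {
  id: string;
  kind: "user" | "agent" | "bot";
}

interface RoomInfo {
  id: string;
  title: string;
  entityIds: string[]; // ids of the participants currently in this room
}

interface WorldInfo {
  id: string;
  title: string;
  rooms: RoomInfo[];
}

// Create a world (comparable to a Discord server) with no rooms yet.
function createWorld(id: string, title: string): WorldInfo {
  return { id, title, rooms: [] };
}

// Add a room (comparable to a channel) to a world.
function addRoom(world: WorldInfo, id: string, title: string): RoomInfo {
  const room: RoomInfo = { id, title, entityIds: [] };
  world.rooms.push(room);
  return room;
}

// Register an entity as a participant of a room (no duplicates).
function joinRoom(room: RoomInfo, entity: EntityInfo): void {
  if (room.entityIds.indexOf(entity.id) === -1) {
    room.entityIds.push(entity.id);
  }
}

// List the ids of every room in the world that contains the given entity.
function roomsOf(world: WorldInfo, entityId: string): string[] {
  return world.rooms
    .filter((room) => room.entityIds.indexOf(entityId) !== -1)
    .map((room) => room.id);
}

const exampleWorld = createWorld("w1", "Example server");
const generalRoom = addRoom(exampleWorld, "r1", "general");
const tradingRoom = addRoom(exampleWorld, "r2", "trading");
const alice: EntityInfo = { id: "e1", kind: "user" };
const helperAgent: EntityInfo = { id: "e2", kind: "agent" };

joinRoom(generalRoom, alice);
joinRoom(generalRoom, helperAgent);
joinRoom(tradingRoom, helperAgent);
```

In this sketch the agent is just another entity, so the same lookup (`roomsOf`) works for users, bots, and agents alike.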

Development & Integration:

- Plugins — Extensions that allow the agent to perform specific actions. For example, there are plugins for interacting with the blockchain or sending messages to Telegram.

- Projects — Prebuilt agent setups that are ready to use. They come configured with plugins, memory, roles, and logic.

- Tasks — The scheduler. It lets the agent run actions on a schedule or postpone tasks for later. (For example, tell the agent to check the ETH price daily and send a signal or even buy when a target price is reached.)
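
The scheduling idea behind Tasks can be sketched in a few lines of TypeScript. Everything here is hypothetical: `TaskScheduler`, `every`, and `tick` are illustrative names rather than ElizaOS APIs, the prices are made-up numbers standing in for a real price feed, and time is passed in explicitly so the example runs instantly.

```typescript
// Hypothetical scheduler sketch; names are illustrative, not ElizaOS APIs.
interface ScheduledTask {
  label: string;
  intervalMs: number; // how often the task repeats
  nextRunAt: number;  // timestamp (ms) at which the task is next due
  run: (now: number) => void;
}

class TaskScheduler {
  private tasks: ScheduledTask[] = [];

  // Register a repeating task; its first run is due one interval after `now`.
  every(label: string, intervalMs: number, now: number, run: (now: number) => void): void {
    this.tasks.push({ label, intervalMs, nextRunAt: now + intervalMs, run });
  }

  // Run every task that is due at `now`, reschedule it, and return the labels that ran.
  tick(now: number): string[] {
    const ran: string[] = [];
    for (const task of this.tasks) {
      if (now >= task.nextRunAt) {
        task.run(now);
        task.nextRunAt = now + task.intervalMs;
        ran.push(task.label);
      }
    }
    return ran;
  }
}

const DAY_MS = 24 * 60 * 60 * 1000;
const TARGET_PRICE = 2000;             // made-up threshold
const fakePrices = [2500, 2100, 1950]; // made-up data, one value per simulated day
const priceSignals: string[] = [];
let dayIndex = 0;

const taskScheduler = new TaskScheduler();
taskScheduler.every("check-eth-price", DAY_MS, 0, () => {
  const price = fakePrices[dayIndex++];
  if (price <= TARGET_PRICE) {
    priceSignals.push(`ETH at ${price}: target reached`);
  }
});

// Nothing is due after half a day.
const ranEarly = taskScheduler.tick(DAY_MS / 2);

// Simulate three days passing; the daily check fires once per day.
for (let d = 1; d <= 3; d++) {
  taskScheduler.tick(d * DAY_MS);
}
```

A real agent would drive `tick` from a timer and replace the callback with an action such as sending a message or submitting a transaction.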

Workflow according to the diagram:

- Service Reception: The user sends a message via a platform (e.g., writes in a Discord chat or sends a message in Telegram), and the agent receives it

- Runtime Processing: The main component coordinates the response generation process

- Context Building: Providers deliver the necessary context

- Action Selection: The agent evaluates and selects appropriate actions

- Response Generation: The selected actions generate the response

- Learning & Reflection: Evaluators analyze the dialogue to understand what can be improved and use it to further train the model

- Memory Storage: New information is saved to the database (interaction history, analysis results, etc.)

- Response Delivery: The response is sent back to the user through the service
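
The steps above can be sketched as a single processing function. This is a simplified illustration under stated assumptions, not the actual ElizaOS runtime: `MiniRuntime`, `ContextProvider`, `AgentAction`, and `DialogueEvaluator` are hypothetical names, an in-memory array stands in for the database, and plain string matching stands in for the LLM.

```typescript
// Hypothetical sketch of the message cycle; none of these names are ElizaOS APIs.
interface IncomingMessage {
  userId: string;
  text: string;
}

// Context is a simple bag of named strings handed to actions.
type AgentContext = { [key: string]: string };

interface ContextProvider {
  key: string;
  provide(msg: IncomingMessage, memory: string[]): string;
}

interface AgentAction {
  matches(msg: IncomingMessage): boolean;
  handle(msg: IncomingMessage, ctx: AgentContext): string;
}

interface DialogueEvaluator {
  // Returns a fact worth remembering, or null if there is none.
  evaluate(msg: IncomingMessage, reply: string): string | null;
}

class MiniRuntime {
  memory: string[] = []; // stands in for the database
  providers: ContextProvider[];
  actions: AgentAction[];
  evaluators: DialogueEvaluator[];

  constructor(providers: ContextProvider[], actions: AgentAction[], evaluators: DialogueEvaluator[]) {
    this.providers = providers;
    this.actions = actions;
    this.evaluators = evaluators;
  }

  process(msg: IncomingMessage): string {
    // Context building: each provider contributes one entry.
    const ctx: AgentContext = {};
    for (const provider of this.providers) {
      ctx[provider.key] = provider.provide(msg, this.memory);
    }
    // Action selection: the first action that matches wins.
    const action = this.actions.filter((a) => a.matches(msg))[0];
    // Response generation.
    const reply = action ? action.handle(msg, ctx) : "Sorry, I cannot help with that.";
    // Learning & reflection: evaluators may extract facts from the exchange.
    for (const evaluator of this.evaluators) {
      const fact = evaluator.evaluate(msg, reply);
      if (fact !== null) this.memory.push(fact);
    }
    // Memory storage: keep the interaction history.
    this.memory.push(`${msg.userId}: ${msg.text}`, `agent: ${reply}`);
    // Response delivery is left to the calling service.
    return reply;
  }
}

const NAME_PREFIX = "my name is ";

// Provider: looks up a previously stored name fact for this user.
const nameProvider: ContextProvider = {
  key: "knownName",
  provide(msg, memory) {
    const marker = `name:${msg.userId}=`;
    const hit = memory.filter((m) => m.indexOf(marker) === 0)[0];
    return hit ? hit.slice(marker.length) : "";
  },
};

const greetAction: AgentAction = {
  matches: (msg) => msg.text.toLowerCase().indexOf("hello") !== -1,
  handle: (_msg, ctx) => `Hello, ${ctx["knownName"] || "stranger"}!`,
};

const echoAction: AgentAction = {
  matches: () => true,
  handle: (msg) => `You said: ${msg.text}`,
};

// Evaluator: remembers the user's name when they introduce themselves.
const nameEvaluator: DialogueEvaluator = {
  evaluate(msg) {
    if (msg.text.toLowerCase().indexOf(NAME_PREFIX) !== 0) return null;
    return `name:${msg.userId}=${msg.text.slice(NAME_PREFIX.length)}`;
  },
};

const miniRuntime = new MiniRuntime([nameProvider], [greetAction, echoAction], [nameEvaluator]);
const firstReply = miniRuntime.process({ userId: "u1", text: "hello" });
const secondReply = miniRuntime.process({ userId: "u1", text: "My name is Ann" });
const thirdReply = miniRuntime.process({ userId: "u1", text: "hello again" });
```

The third reply differs from the first only because the evaluator stored a fact during the second exchange and the provider surfaced it later, which is the interaction → analysis → improvement loop in miniature.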

What does this provide? This creates a continuous operational loop: interaction → analysis → improvement. This mechanism enables agents to constantly learn and evolve through ongoing interaction.

It's starting to make sense, but there are still questions. So let’s take a closer look at the most important components in action.

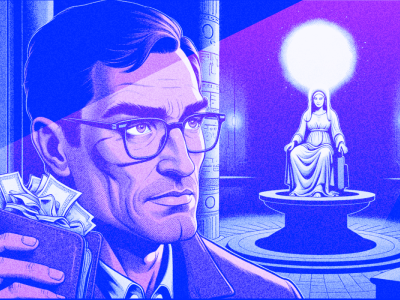

Service

The service acts as a layer between Eliza agents and platforms like Discord and Telegram.

Services handle integration with specific platforms (for example, processing messages in Discord or posts on X).

A single agent can manage multiple services at once and maintains separate context for each platform.
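
One way to picture a single agent serving several platforms with separate context is a registry of service adapters keyed by platform. The sketch below is hypothetical: `PlatformService`, `MultiServiceAgent`, and their methods are illustrative names, and the `sent` array stands in for a real call to the Discord or Telegram API.

```typescript
// Hypothetical sketch; these are not ElizaOS service interfaces.
interface PlatformService {
  platform: string; // e.g. "discord", "telegram"
  sent: string[];   // outbox, standing in for a real platform API call
  send(text: string): void;
}

function makeService(platform: string): PlatformService {
  const sent: string[] = [];
  return {
    platform,
    sent,
    send: (text: string) => {
      sent.push(text);
    },
  };
}

class MultiServiceAgent {
  private services: { [platform: string]: PlatformService } = {};
  private history: { [platform: string]: string[] } = {};

  // Attach a service and start an empty, platform-specific context for it.
  register(service: PlatformService): void {
    this.services[service.platform] = service;
    this.history[service.platform] = [];
  }

  // Entry point a service would call when a message arrives on its platform.
  receive(platform: string, text: string): void {
    const service = this.services[platform];
    if (!service) throw new Error(`No service registered for ${platform}`);
    const log = this.history[platform];
    log.push(text);
    service.send(`[${platform}] message #${log.length} received`);
  }

  // Return a copy of the context kept for one platform.
  contextFor(platform: string): string[] {
    return (this.history[platform] || []).slice();
  }
}

const discordService = makeService("discord");
const telegramService = makeService("telegram");
const multiAgent = new MultiServiceAgent();
multiAgent.register(discordService);
multiAgent.register(telegramService);

multiAgent.receive("discord", "gm");
multiAgent.receive("discord", "what is the ETH price?");
multiAgent.receive("telegram", "hi");
```

Because the history is keyed by platform, the Telegram conversation never sees the Discord messages, even though one agent object handles both.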

You can view the full list of active services and their capabilities here.

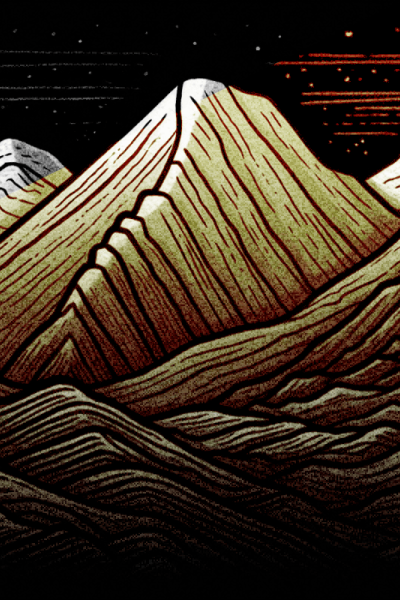

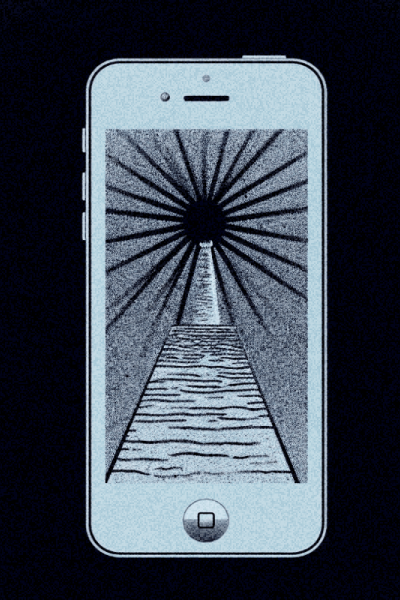

Agent Runtime

As mentioned earlier, this is the main component that receives messages from the service and manages the processing cycle — handling and responding to user messages or commands.

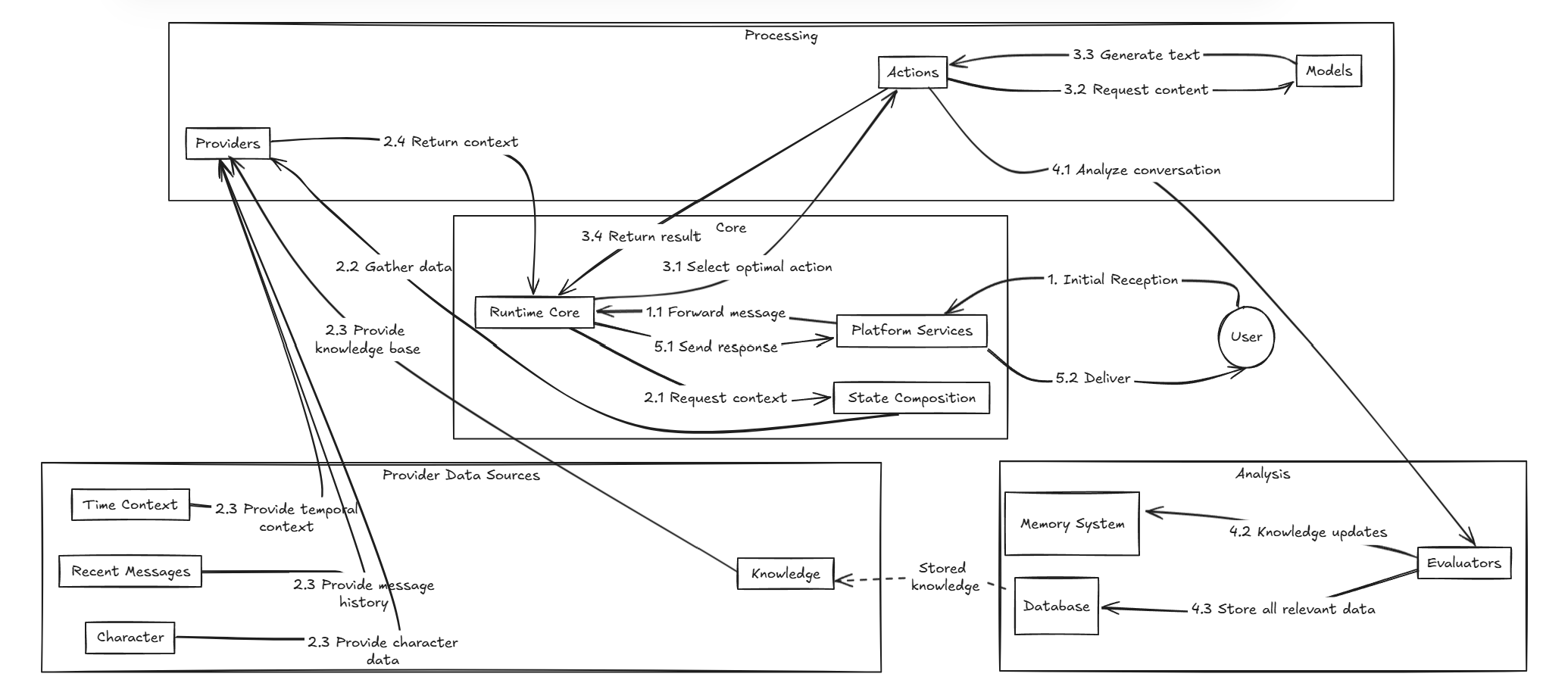

Let’s look at a detailed workflow diagram: